In recent years, mediation analysis has become a popular means of identifying and quantifying the pathways linking an exposure to an outcome, thereby elucidating how a particular exposure contributes to the occurrence of a specific outcome. When a mediator is a modifiable risk factor, this opens up new opportunities for interventions to block (part of) the exposure’s effect on the outcome. Recent examples in the Scandinavian Journal of Work, Environment & Health have addressed the mediating effect of wellbeing on the association between type of office and job satisfaction (1) and examined whether workplace social capital contributes to the association between organizational changes and employee exit from work (2).

Mediation analysis requires a specific study design ensuring that the temporal sequence of exposure, mediator, and outcome supports the argument for causation. A good illustration presented by Halonen and colleagues (3) used the Swedish Longitudinal Occupational Survey of Health (SLOSH) with biennial waves to investigate whether depressive symptoms mediated the association between effort–reward imbalance and subsequent neck-shoulder pain. The longitudinal design allowed for a mediation analysis whereby exposure was measured two years before the mediator, and the mediator was assessed two years before the outcome of interest. When exposure and mediator are self-reported, without assurance that the mediator occurred after the exposure (eg, years of education in school preceding exposure to working conditions), mediation analysis is prone to reverse causality (ie, not the exposure causing the mediator but the mediator causing the exposure) and has little to offer in terms of a better understanding of the mechanism by which the exposure is linked to the outcome. A related issue is the stability of the mediator over time. Some authors have argued that mediation analysis is bound to fail in studies with a time window of measurements that does not sufficiently capture the fluctuations of the potential mediator over time (4).

Recent debates on mediation analysis have specifically addressed the methodology and assumptions that allow for a causal interpretation. The traditional approach to mediation analysis in health research has largely relied on the influential 1986 paper of Baron & Kenny (5). In this approach, mediation is assessed by estimating the effect of the exposure on the mediator and the effect of the mediator on the outcome (adjusting for the exposure), and multiplying the two estimates to derive the indirect or mediated effect (the “product-of-coefficients” approach). Alternatively, the effect of the exposure on the outcome is estimated with and without adjustment for the mediator, and the difference between the two estimates is used to quantify the mediated effect (the “difference-of-coefficients” approach). What has been largely overlooked, however, is the fact that these approaches are only valid in linear models and rely on the assumption that there is no interaction between exposure and mediator on the outcome (6). Moreover, because the importance of controlling for mediator-outcome confounders was never mentioned in Baron & Kenny, many studies have neglected to account for potential confounding factors of the mediator-outcome relationship. An eloquent illustration of the problems of the classical mediation method and the risk of severe bias in the mediated effect is presented by Pearce & Vandenbroucke (7).
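The two classical estimators can be sketched on simulated data. This is a minimal illustration, not an analysis from any of the cited studies: the data-generating coefficients, the single confounder `c`, and the helper names `ols` and `classical_mediation` are all assumptions made for the example. The outcome model is linear with no exposure–mediator interaction, which is exactly the setting in which the two approaches are valid.

```python
import numpy as np

def ols(y, *cols):
    """Ordinary least squares with an intercept; returns [intercept, coef_1, ...]."""
    X = np.column_stack([np.ones(len(y)), *cols])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def classical_mediation(a, m, y, c):
    """Product-of-coefficients and difference-of-coefficients estimates."""
    alpha = ols(m, a, c)      # mediator model: M ~ A + C
    beta = ols(y, a, m, c)    # outcome model:  Y ~ A + M + C
    gamma = ols(y, a, c)      # total effect:   Y ~ A + C
    product = alpha[1] * beta[2]       # (A -> M) times (M -> Y given A)
    difference = gamma[1] - beta[1]    # total effect minus direct effect
    return product, difference

# Simulated data (all coefficients are assumptions for illustration).
rng = np.random.default_rng(0)
n = 20_000
c = rng.normal(size=n)                                   # measured confounder
a = 0.5 * c + rng.normal(size=n)                         # exposure
m = 0.6 * a + 0.4 * c + rng.normal(size=n)               # mediator
y = 0.3 * a + 0.5 * m + 0.2 * c + rng.normal(size=n)     # outcome, no A*M interaction

product, difference = classical_mediation(a, m, y, c)
print(f"product-of-coefficients:    {product:.3f}")
print(f"difference-of-coefficients: {difference:.3f}")
```

With least-squares fits on the same sample and the same covariates, the two estimates coincide, and here both approximate the simulated indirect effect of 0.6 × 0.5 = 0.3. That agreement is a property of the linear setting and should not be expected once the outcome model is non-linear or contains an exposure–mediator interaction.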

In response to the limitations of traditional mediation methods, a new mediation methodology is rapidly developing, often referred to as “causal mediation analysis” (6, 8). This methodology has derived counterfactual definitions of direct and indirect effects, which are independent of any model and, under explicitly stated assumptions, allow for a causal interpretation of the estimated effects (9). These definitions enable greater flexibility and provide researchers with the tools to better scrutinize the assumptions underlying mediation analysis. The causal inference approach to mediation has given rise to two different types of effects: (i) natural direct and indirect effects, and (ii) controlled direct effects. Natural direct and indirect effects by definition always sum to the total effect, even in the presence of exposure–mediator interaction. Intuitively, the natural direct effect captures the effect of the exposure on the outcome that is not due to its effect on the mediator, whereas the natural indirect effect captures the effect of the exposure on the outcome that is due to its effect on the mediator (8). Controlled direct effects, on the other hand, quantify the effect of the exposure on the outcome if the mediator were fixed at a specific value uniformly in the population. Consequently, whereas a total effect can always be decomposed into a natural direct and indirect effect, controlled direct effects are estimated separately for each level of the mediator, and these estimates may differ substantially depending on the magnitude of the interaction between exposure and mediator.
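For a binary exposure, these effects are commonly written in counterfactual notation as below. The notation is an assumption of this sketch rather than something defined in the text: Y(a, m) denotes the outcome that would be observed if the exposure were set to a and the mediator to m, and M(a) denotes the mediator value that would be observed under exposure a.

```latex
\begin{align*}
\text{TE}     &= E[Y(1, M(1))] - E[Y(0, M(0))] \\
\text{NDE}    &= E[Y(1, M(0))] - E[Y(0, M(0))] \\
\text{NIE}    &= E[Y(1, M(1))] - E[Y(1, M(0))] \\
\text{TE}     &= \text{NDE} + \text{NIE} \\
\text{CDE}(m) &= E[Y(1, m)] - E[Y(0, m)]
\end{align*}
```

The decomposition of the total effect holds by construction, because the term E[Y(1, M(0))] cancels when the natural direct and indirect effects are added. The controlled direct effect carries the argument m because it is defined separately for each fixed mediator level.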

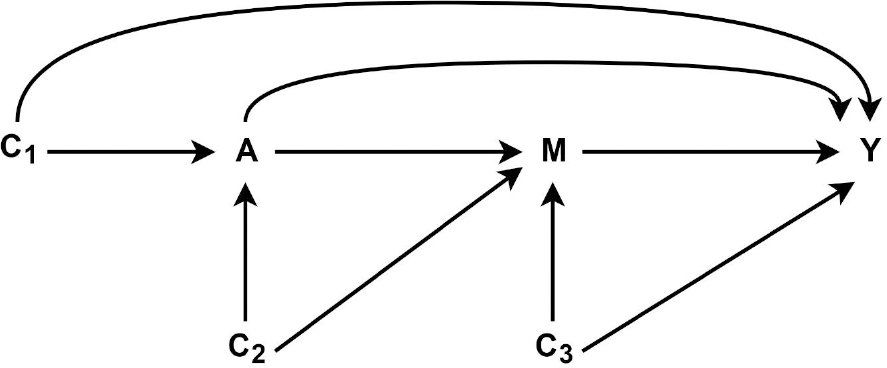

Decomposition of a total effect of an exposure on an outcome into natural direct and indirect effects requires that there is no unmeasured confounding of the (i) exposure–outcome, (ii) exposure–mediator, and (iii) mediator–outcome relationships. Furthermore, a critical assumption is (iv) that there are no measured or unmeasured mediator–outcome confounders that are themselves affected by the exposure (6). The first three assumptions are depicted in Figure 1: if C1, C2 and C3 include all relevant confounders of the three pathways under study (A→Y, A→M, and M→Y), the first three assumptions are met.

Figure 1

Generic directed acyclic graph for mediation analysis. A=exposure, M=mediator, Y=outcome, C1=exposure–outcome confounder, C2=exposure–mediator confounder, C3=mediator–outcome confounder.

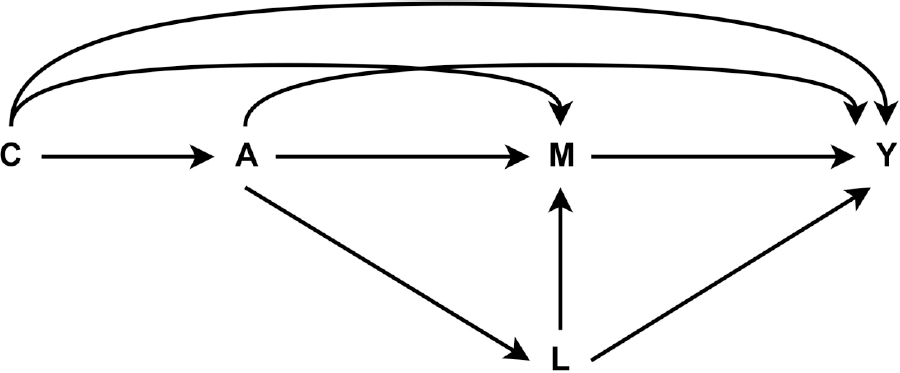

The critical fourth assumption, that the mediator–outcome association is not confounded by any variable that is itself affected by the exposure, is depicted in Figure 2. Here (L) is both a confounder of the M→Y path and a mediator on the A→Y path. An example would be the association between shift work (A) and diabetes mellitus (Y), as reported by Tucker and colleagues (10). Lack of leisure-time physical activity may be considered a mediator (M). Whereas controlling for confounders (C) (eg, age and sex) is straightforward, controlling for overweight (L) may be problematic. The issue here is that overweight may be a confounder of the association between leisure-time physical activity and diabetes mellitus (individuals with overweight might find it difficult to be active in their leisure), and overweight may also be on the causal pathway from shift work to diabetes mellitus (eg, by an effect of shift work on dietary patterns or physiological processes). In the presence of such exposure-induced mediator–outcome confounders, the researcher is stuck between bad choices: not adjusting for (L) will bias the estimated effect of the mediator; adjusting for (L) will bias the estimated effect of the exposure. Hence, researchers must convince themselves that such confounding is not present. When such confounding is a serious possibility, natural direct and indirect effects cannot be identified, regardless of whether (L) is measured or not. In such a situation, new methods are required that allow for effect decomposition in the presence of exposure-induced mediator–outcome confounders by using so-called interventional (in)direct effects, which consider potential interventions on a population level (11, 12).

Figure 2

Generic directed acyclic graph for mediation analysis including exposure-induced mediator–outcome confounders (L).
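The dilemma created by an exposure-induced mediator–outcome confounder (L) can be illustrated with a small simulation. This is a sketch under assumed linear structural equations. The coefficients, the variable names, and the `ols` helper are hypothetical and are not taken from the shift work example. Standard regression is fitted with and without adjustment for L and compared against the values implied by the simulation.

```python
import numpy as np

def ols(y, **columns):
    """Least squares with an intercept; returns {column name: coefficient}."""
    names = list(columns)
    X = np.column_stack([np.ones(len(y))] + [columns[k] for k in names])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return dict(zip(names, beta[1:]))

# Assumed structure: A -> L, A -> M, L -> M, A -> Y, M -> Y, L -> Y.
rng = np.random.default_rng(1)
n = 20_000
a = rng.binomial(1, 0.5, size=n).astype(float)        # exposure
l = 0.5 * a + rng.normal(size=n)                      # confounder affected by A
m = 0.4 * a + 0.6 * l + rng.normal(size=n)            # mediator
y = 0.3 * a + 0.5 * m + 0.7 * l + rng.normal(size=n)  # outcome

unadjusted = ols(y, a=a, m=m)       # Y ~ A + M
adjusted = ols(y, a=a, m=m, l=l)    # Y ~ A + M + L

# Values implied by the simulation above.
true_mediator_effect = 0.5
true_effect_not_via_m = 0.3 + 0.7 * 0.5   # A -> Y plus A -> L -> Y, with M held fixed

print(f"M coefficient, unadjusted:  {unadjusted['m']:.2f}")
print(f"M coefficient, L-adjusted:  {adjusted['m']:.2f}")
print(f"A coefficient, L-adjusted:  {adjusted['a']:.2f}")
```

Without adjustment for L, the mediator coefficient is inflated because L confounds the M→Y path. With adjustment, the mediator coefficient is recovered, but the exposure coefficient now excludes the part of the exposure effect that runs through L, so it falls short of the effect of A that does not pass through M.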

An alternative approach in mediation analysis is to quantify controlled direct effects, which rely only on assumptions (i) and (iii): no uncontrolled exposure–outcome confounding and no uncontrolled mediator–outcome confounding. Moreover, some have argued that controlled direct effects are much more policy-relevant because they estimate the proportion of the total effect of the exposure on the outcome that could be eliminated by a specific intervention on the mediator (13, 14). Although controlled direct effects can still be estimated in the presence of exposure-induced mediator–outcome confounders, this does require more sophisticated methods, such as marginal structural models (15, 16) or the g-formula (17). Although still infrequently used, these approaches offer an important new toolbox for health research and are often easily implemented in standard statistical software (18).
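In the simplest case, with no exposure-induced mediator–outcome confounders and a correctly specified linear outcome model, a controlled direct effect can be read from a single outcome regression that includes an exposure–mediator product term. The sketch below makes those assumptions explicit. The simulated coefficients and the function names `fit_outcome_model` and `controlled_direct_effect` are hypothetical. It does not implement marginal structural models or the g-formula.

```python
import numpy as np

def fit_outcome_model(a, m, y, c):
    """Fit Y ~ A + M + A*M + C; returns [intercept, b_A, b_M, b_AM, b_C]."""
    X = np.column_stack([np.ones(len(y)), a, m, a * m, c])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def controlled_direct_effect(coef, m_level):
    """Effect of A = 1 versus A = 0 on Y with the mediator fixed at m_level."""
    return coef[1] + coef[3] * m_level

# Simulated data with an exposure-mediator interaction (assumed coefficients).
rng = np.random.default_rng(2)
n = 20_000
c = rng.normal(size=n)                                  # measured confounder
a = rng.binomial(1, 1.0 / (1.0 + np.exp(-c)))           # exposure depends on C
m = 0.6 * a + 0.4 * c + rng.normal(size=n)              # mediator
y = 0.3 * a + 0.5 * m + 0.2 * a * m + 0.2 * c + rng.normal(size=n)

coef = fit_outcome_model(a, m, y, c)
cde_by_level = {lvl: controlled_direct_effect(coef, lvl) for lvl in (0.0, 1.0, 2.0)}
for lvl, cde in cde_by_level.items():
    print(f"CDE with mediator fixed at {lvl:.0f}: {cde:.2f}")
```

Because of the interaction, the controlled direct effect differs by mediator level: in this simulation it is 0.3 + 0.2 × m, so the estimates at m = 0 and m = 2 should lie near 0.3 and 0.7.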

To conclude, mediation analysis has gained considerable attention in recent years and is rapidly developing with regard to approach and methods. We invite researchers to move away from traditional mediation analysis and apply the newer mediation methods in their studies. First, a critical stance is required to evaluate whether the study design and data collected permit a meaningful mediation analysis. Second, studies should explicitly address the crucial assumptions that are fundamental for a causal interpretation of mediation. Third, sensitivity analysis is helpful to assess the robustness of the results to potential violations of the underlying assumptions.