The randomized controlled trial (RCT) is considered the gold standard in evaluative medical research because it allows causal inferences about the therapy under study. The first RCT was reported in a 1948 issue of the British Medical Journal (BMJ) and involved the experimental treatment of pulmonary tuberculosis (1). In this trial, a particular group of English tuberculosis patients from different care facilities, comparable in disease symptoms and age, was included. The included patients were assigned to either a combined medicine and bed-rest therapy, or bed-rest therapy alone, based on a statistical series of random sampling numbers. Neither the patients nor the doctors involved knew the condition the patient was assigned to, a procedure later named “double blind”. Therapy progress was reported on forms specifically designed for this trial. Due to this design, the researchers were able to demonstrate the added value of the combined treatment over the bed-rest treatment, but only in the first three months after onset of the disease. Thereafter a deterioration emerged, probably due to resistance to the medicine under study. Many researchers have followed this example ever since. The beauty of the randomization procedure is that chance (probably) ensures that known and unknown prognostic factors are balanced over the treatment conditions and thus do not interfere with the treatment–outcome relationship. Therefore, conclusive statements about the effectiveness of the therapy can be made.

In occupational health research, a typical RCT aims, for instance, to reduce productivity loss at work (ie, a primary outcome) for a randomly chosen group of employees with medically verified upper-extremity disorder (ie, specific characteristics) via an ergonomic assessment at the worksite and a physician contacting each employee’s supervisor to discuss potential accommodations at work (ie, a multicomponent intervention). The effectiveness of the intervention is evaluated by the change in primary outcome from pre- to post-test in the intervention group relative to the change in this outcome in the reference group that did not receive the intervention (2). However, occupational health researchers are increasingly addressing questions regarding the outcomes of complex interventions. A complex intervention can consist of (i) multiple components, (ii) multiple providers and thus multiple levels, (iii) multiple locations, and/or (iv) multiple (varying) outcomes. The components, providers, locations and outcomes are interdependent and therefore the intervention can be difficult to standardize or administer uniformly (3–5). Furthermore, the context is often complex and thus nearly impossible to control entirely (6). Conducting an RCT on a complex intervention within an occupational health context is thus not always the most feasible option (7, 8).

The British Medical Research Council (MRC) recently published an updated guide that underlines the need for innovative evaluation methods (9). Although the MRC considers individual randomization in trials as the most robust design to prevent allocation bias, it is more and more acknowledged that common evaluation methods are not always practical or ethical for complex interventions (9). The RCT sometimes even offers too little information to draw meaningful conclusions for science or practice. More specifically, an RCT allows conclusions on the effectiveness of the intervention for a selected sample of individuals. Researchers have argued that because of complexity in the intervention and context, the required conditions that are needed for an efficacy trial will never occur (10). Even if an efficacy trial has been performed with success, then it still is “highly unlikely that interventions that do well in efficacy studies will do well in effectiveness studies, or in real-world applications” [(10) p1262].

In order to further develop the evidence base in occupational health there is a clear need for alternatives to the RCT. These alternatives can be formulated along two lines: experimental (most often RCT variants) and observational studies (11). Some (experimental) alternatives have been applied already in the occupational setting. The most commonly applied RCT variant is the cluster RCT, in which groups of individuals rather than individuals are randomized (12). Cluster RCT typically involve two levels, the cluster (eg, department) and their individual members (eg, worker), although trials of more than two levels (eg, company, department, and worker) also exist (12). Cluster RCT have several advantages over individual RCT in organizational interventions, namely (i) increased logistic feasibility in delivering the intervention, (ii) analysis and evaluation is conducted at the same level as the intervention is applied to (ie, the group), and (iii) contamination is avoided, which might occur when unblinded interventions are administered to some individuals but not to others in the same setting (eg, department, team, occupational physician) (13). Another commonly applied variant is the controlled trial wherein a selected group of individuals or clusters receiving the intervention is compared to a reference group that is matched on known prognostic factors (eg, age) (14). This design can be preferable to an individual or cluster RCT for practical or ethical reasons in an occupational setting. Apart from randomization, the controlled trial shares all characteristics with an RCT, but lacks the advantage of balanced unknown prognostic factors in both conditions.

However, for these alternative RCT designs, challenges remain that impede drawing causal inferences (15). The cluster RCT, for example, needs much larger numbers of participants within an experimental setting, which is often problematic in terms of feasibility and costs. The controlled trial suffers from the non-random allocation to groups, which may leave known and unknown prognostic factors unbalanced between the groups. This article presents an overview of other experimental and observational study designs for occupational health interventions, starting with an overview of practical challenges in conducting an RCT, the methodological consequences of these challenges, and an empirical example. Thereafter, the key features of each design are described, including the advantages and disadvantages, and how the challenges are minimized by applying this design.

Challenges in applying RCT to evaluate complex interventions

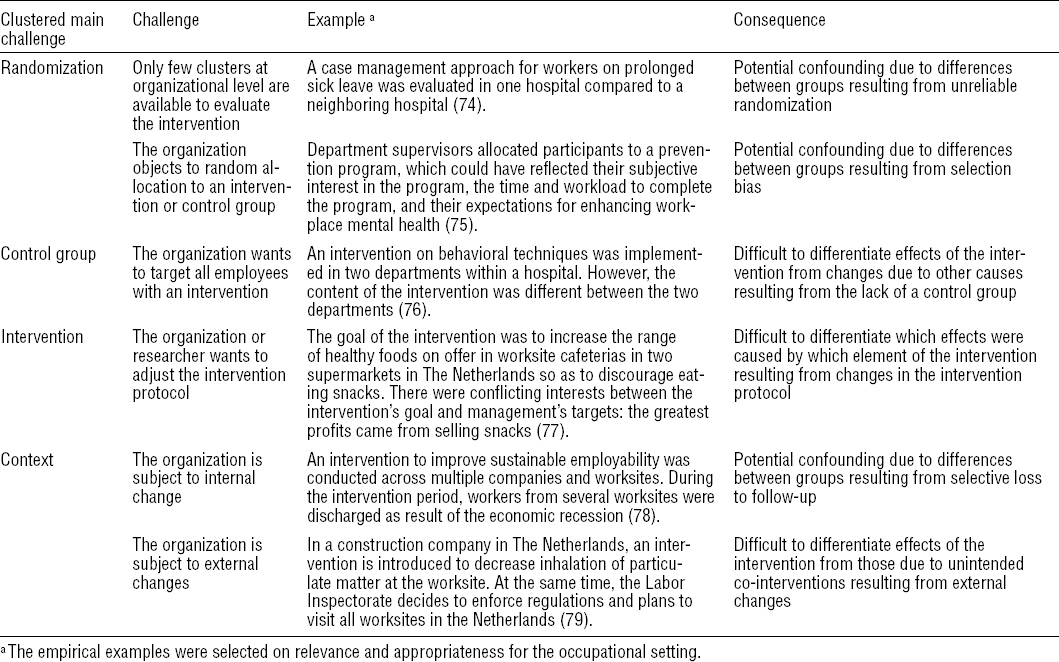

When conducting an RCT in the occupational setting, researchers face challenges concerning the methodology (ie, randomization and control group), the intervention, and the context. Empirical examples for each challenge are given in table 1.

Methodology: randomization

Randomization of participants to the experimental condition (intervention group) or usual care/placebo condition (control group) eliminates allocation bias and internal validity threats, maximizing the probability that (un)known confounding variables will be evenly distributed over groups (16).

Challenge 1. Only few clusters at the organizational level are available to evaluate the intervention

Many workplace interventions are implemented at the group level (eg, company, facility, department, and team). The randomization procedure is then applied at the group or – in methodological terms – cluster level. However, recruiting enough clusters within a specific context is often difficult. If too few clusters are included, controlling by chance for all factors and conditions that might differ between groups is impeded. Consequently, there might be an unequal distribution of baseline characteristics between groups which introduces bias to the study [eg, (17)].

Challenge 2. The organization objects to random assignment of persons or departments

In practice, acknowledgment of a problem which is unique to a certain department (eg, high sickness absence, lagging work performance) can be a strong driver for organizations to participate in intervention research. Targeting this department with an intervention is at least in their interest and at the most a precondition to participate. Thereby, companies obstruct randomization and potential bias is thus introduced. If the organization wants to decide on the allocation of the employees to the intervention and control group, the two most important resulting biases are confounding (ie, error due to a third variable that influences the exposure–outcome relation) (16) and selection bias (ie, error due to systematic differences in characteristics such as motivation, between intervention and control group) (16), which are difficult to overcome (18).

Methodology: control group

The effect of an intervention is measured as the difference on a certain outcome between the intervention group and the control group (18). A control group is needed to distinguish between change in outcome over time due to the planned intervention, or changes over time due to unmeasured or unknown factors (eg, a policy measure).

Challenge 3. The organization wants to target all employees with an intervention

Organizations are often willing to participate in an intervention study if an acknowledged problem is to be solved (eg, high prevalence of low-back pain). Hence, the employer is motivated to demonstrate that (s)he takes the problem seriously and therefore demands that everyone should be able to participate. The employer considers it unethical to offer the intervention to a selected group only, while every employee has a potentially elevated risk. As a consequence, studies within the occupational health setting sometimes have to be performed without a control group, complicating the distinction between effect of the intervention and autonomous change over time.

Intervention

When following the guidelines of conducting an RCT, a predefined protocol for implementing and evaluating interventions is preferred in order to reach high internal validity (16). High fidelity to the protocol is furthermore important in order to understand key intervention processes and functions, and thus enable answers to the question of why the intervention is or is not effective.

Challenge 4. The organization or the researcher wants to adjust the intervention protocol

Either the organization or the researchers may want to adjust the intervention protocol to fit the specific context per cluster, thereby violating the standardization principle. For instance, the order of intervention components may be altered or the intervention components may be tailored to a specific group of workers or to specific occupational health problems. If adjustments are made within clusters, it becomes difficult to establish which intervention components or what implementation processes contributed to the effectiveness or lack thereof of the intervention, a situation sometimes referred to as a ‘black box’ (19, 20).

Context

For most occupational health interventions a double-blind, placebo-controlled trial is nearly impossible: complex interventions are dependent on the context in which they are applied. Moreover, besides the intentional adjustments described under challenge 4, the intervention provider, the participants or the context may unintentionally influence the delivery and content of the intervention and thereby the outcomes (ie, information bias).

Challenge 5. The organization is subject to internal change

Many worksites and departments are subject to continuous change (21). For example, within the participating department not only the intervention under study, but also a co-intervention is delivered. In hospitals, lifting devices may be introduced to reduce mechanical load among nurses, whereas simultaneously hospital beds are replaced by high-low beds that also reduce nurses’ mechanical load. Implementing the intervention under fully controlled conditions is thereby impeded. A second example is a change in staffing: employees and managers change jobs or retire, new employees are hired, and teams are moved to other areas or downsized. Consequently, high loss to follow-up can be expected and a decreased study sample complicates reliable conclusions regarding intervention effects.

Challenge 6. The organization is subject to external change

Even when the intervention is performed under controlled conditions within the company, external changes might interfere with the intervention (21). For instance, increased enforcement of regulations by the Labour Inspectorate on the main outcome of the intervention might take place simultaneously (eg, exposure to dust containing quartz). Or, a nationwide campaign on work stress is implemented during the same period as a local stress management intervention, which motivates the control group to implement stress prevention measures as well. As a consequence of these so-called co-interventions, it becomes more difficult to distinguish autonomous change from effect, even if a control group is present.

In sum, difficulties with regard to methodology, intervention, and context may hamper the evaluation of complex occupational health interventions by means of an RCT. However, we fully agree with Kristensen (18), who stated that “there may be many good reasons for not performing a RCT in an occupational setting. But there are no good reasons for ignoring the problems created by not applying such a design.”

Alternatives for evaluating complex occupational health interventions

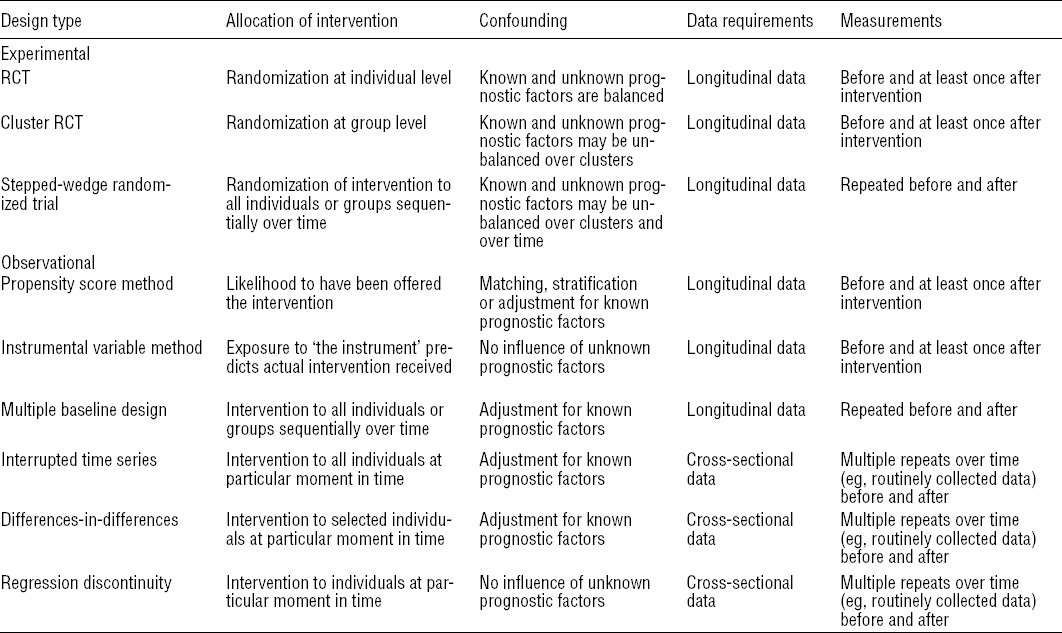

Several alternative experimental designs and designs using observational data are potentially interesting for the evaluation of complex interventions (14, 22). The core team of authors discussed a list of potential alternatives for the occupational health setting and those most relevant and applicable to the occupational health setting were selected for this article. In contribution to the current debate on alternatives to randomization in the evaluation of public health interventions (9), the selection of alternatives is described based on theoretical literature and empirical examples (tables 2 and 3).

Alternative design in experimental research

Stepped-wedge randomized trial

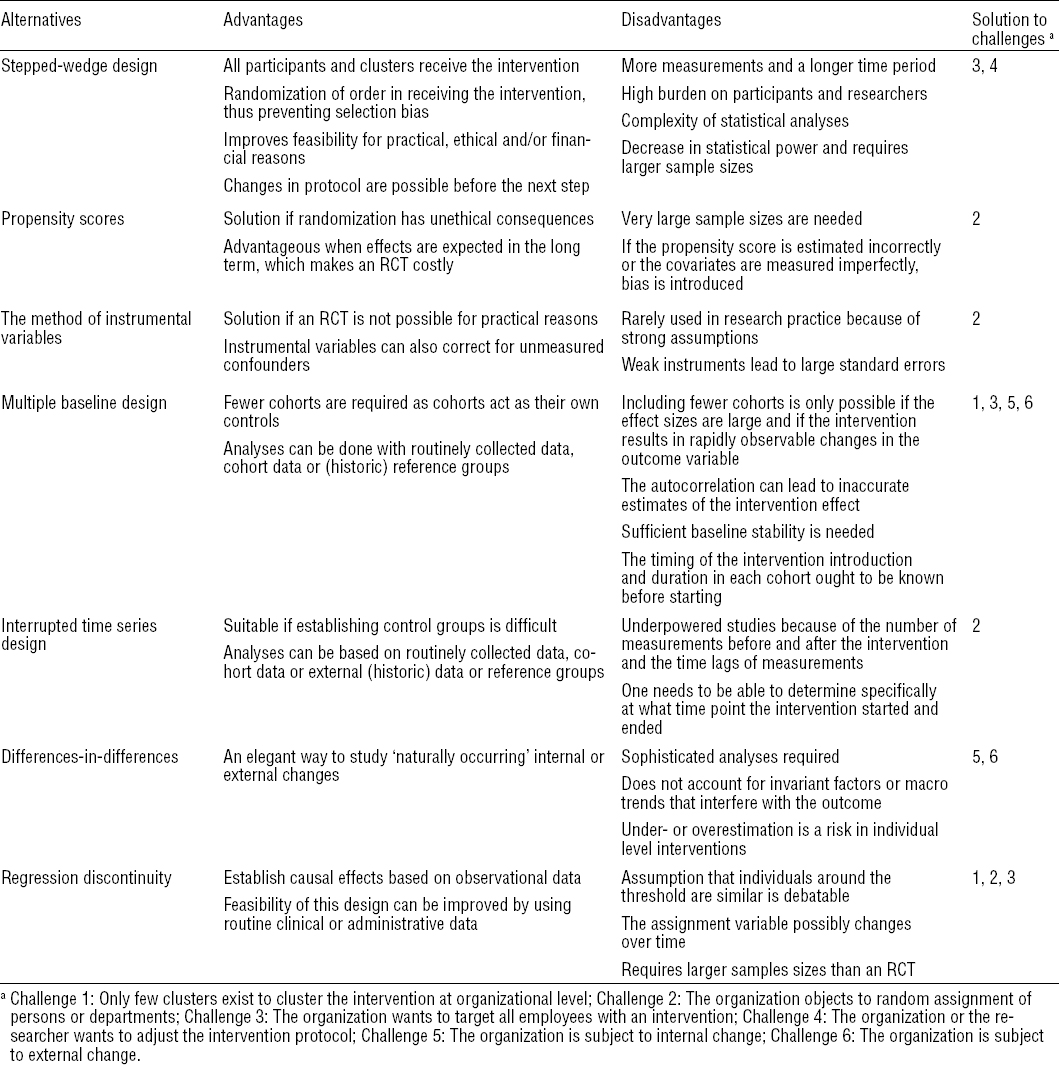

The stepped-wedge randomized trial is a modification of the individual or cluster RCT in which an intervention is sequentially rolled out to all participants over consecutive time periods (23). The order in which the individuals or clusters receive the intervention is randomized, so that at the end of the entire time period all participants have received the intervention, thereby counteracting challenge 3 (the organization wants to target all employees with an intervention) (24). The stepped-wedge design is particularly suitable if it is considered unethical to withhold the intervention from participants in a control group (25). Additionally, the stepped-wedge design allows for improvement of the intervention based on lessons learned in every subsequent step (which makes it very suitable for effectiveness trials in practice) and thereby eliminates challenge 4 (the organization or the researcher wants to adjust the intervention protocol). Due to the within- and between-cluster comparisons at each measurement time across all time periods, this design allows for a variety of conclusions: both short- and long-term effects, fade-out effects, and the natural course of the condition under study (26).
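The randomized roll-out order described above can be sketched in a few lines of Python. This is a minimal illustration, not the allocation procedure of any cited trial: the function names, the unit labels, the round-robin division over steps, and the fixed seed are all assumptions made for the example.

```python
import random

def stepped_wedge_schedule(clusters, n_steps, seed=0):
    """Randomly order clusters and spread them over n_steps crossover steps.

    Returns a dict mapping each cluster to the step (1..n_steps) at which it
    switches from the control condition to the intervention.
    """
    rng = random.Random(seed)   # fixed seed keeps the allocation reproducible
    order = list(clusters)
    rng.shuffle(order)          # the randomized element of the design
    # Deal the shuffled clusters round-robin so step sizes differ by at most one.
    return {cluster: (i % n_steps) + 1 for i, cluster in enumerate(order)}

def exposure_matrix(schedule, n_steps):
    """Per cluster, a list over measurement periods 0..n_steps.

    An entry is 0 while the cluster is still in the control condition and 1
    once it has crossed over; period 0 is the all-control baseline.
    """
    return {
        cluster: [1 if period >= step else 0 for period in range(n_steps + 1)]
        for cluster, step in schedule.items()
    }

# Hypothetical example: 17 clusters rolled out over 5 steps.
units = [f"unit_{k:02d}" for k in range(1, 18)]
schedule = stepped_wedge_schedule(units, n_steps=5)
matrix = exposure_matrix(schedule, n_steps=5)
for unit in sorted(schedule, key=schedule.get):
    print(unit, "crosses over at step", schedule[unit], matrix[unit])
```

Each row of the exposure matrix starts in the control condition and ends in the intervention condition, which is what allows both within-cluster (before versus after) and between-cluster (exposed versus not yet exposed) comparisons in every period.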

For the evaluation of a care program for staff members in dementia special care units, a stepped-wedge design was used (27). The care program consisted of tools and procedures to guide staff members through the detection, analysis, treatment and evaluation of residents’ challenging behavior. After allocating seventeen units randomly to five groups, every four months a new group started with the intervention (24 months in total). Burnout, job satisfaction, and job demands were self-assessed before the start, midway and after the implementation process. The results of the multilevel analyses of 380 staff members showed a significant positive effect for job satisfaction [β 0.93, 95% confidence interval (95% CI) 0.48–1.38], whereas no statistically significant effects were found for burnout and job demands.

Although the stepped-wedge design helps to minimize or overcome two important challenges, it introduces new challenges in itself. These challenges are firstly that larger sample sizes might be required for some outcomes since, with the increased number of groups to compare, the design may have less statistical power than the regular (cluster) RCT (28, 29). Secondly, the data collection in each time period can put a high burden on participants and researchers, which might hamper the feasibility of the study (29). The design is most feasible if data can be (partly) routinely collected at the appropriate time intervals in a reliable and valid way (28). Thirdly, statistical analysis is complex because both a random coefficient for cluster and a fixed effect for time need to be taken into account (23).

Alternative designs in observational studies

In observational studies, assignment to the experimental condition is not under the researchers’ control. The intervention and control group may differ in (observed) covariates, which could lead to biased estimates of intervention effects. Hereafter we describe alternative evaluation designs specifically developed to evaluate interventions with observational data while dealing with potential bias.

Propensity scores

The propensity scores method is a statistical matching technique that can be applied to control for confounding in evaluation studies with observational data (30, 31). The first step is to estimate propensity scores for all individuals, defined as the conditional probability of (a particular) exposure to the intervention given a number of confounding variables (32). The propensity score can be estimated with logistic regression analysis, modeling the exposure as dependent variable and the potential confounders as independent variables (33). Because some individuals with similar propensity scores are exposed to the intervention, whereas others with a similar score are not, the method assumes that actual exposure to the intervention within these individuals mimics randomization (34, 35), thereby counteracting challenge 2 (the organization objects to random assignment of persons or departments to the intervention or control group). Then, the intervention effect will be estimated using the propensity score through matching of individuals, stratification or regression adjustment (33, 36).
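The two steps (estimating the score with logistic regression, then matching on it) can be sketched as follows. This is a simplified illustration under stated assumptions: the data are simulated with a single confounder and a true intervention effect of 2.0, the logistic model is fitted with a hand-written gradient descent rather than a statistics package, and the greedy 1:1 nearest-neighbour matching with a caliper of 0.05 is only one of several matching schemes. None of these choices is taken from the cited studies.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, exposed, lr=0.5, epochs=500):
    """Logistic regression by batch gradient descent; returns [intercept, coef_1, ...]."""
    n, p = len(X), len(X[0])
    w = [0.0] * (p + 1)
    for _ in range(epochs):
        grad = [0.0] * (p + 1)
        for xi, ei in zip(X, exposed):
            err = sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))) - ei
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w

def propensity_scores(X, exposed):
    """Predicted probability of exposure given the confounders."""
    w = fit_logistic(X, exposed)
    return [sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))) for xi in X]

def match_pairs(scores, exposed, caliper=0.05):
    """Greedy 1:1 nearest-neighbour matching on the score, without replacement."""
    controls = [i for i, e in enumerate(exposed) if e == 0]
    pairs = []
    for i, e in enumerate(exposed):
        if e == 1 and controls:
            j = min(controls, key=lambda c: abs(scores[c] - scores[i]))
            if abs(scores[j] - scores[i]) <= caliper:
                pairs.append((i, j))
                controls.remove(j)   # each control is used at most once
    return pairs

def mean(values):
    return sum(values) / len(values)

# Simulated (hypothetical) data: the confounder drives both exposure and outcome.
rng = random.Random(1)
n = 500
confounder = [rng.gauss(0, 1) for _ in range(n)]
exposed = [1 if rng.random() < sigmoid(c) else 0 for c in confounder]
outcome = [2.0 * e + 3.0 * c + rng.gauss(0, 1) for e, c in zip(exposed, confounder)]

scores = propensity_scores([[c] for c in confounder], exposed)
pairs = match_pairs(scores, exposed)

naive = (mean([y for y, e in zip(outcome, exposed) if e == 1])
         - mean([y for y, e in zip(outcome, exposed) if e == 0]))
matched = mean([outcome[i] - outcome[j] for i, j in pairs])
print(f"naive difference: {naive:.2f}, matched difference: {matched:.2f}, pairs: {len(pairs)}")
```

In this simulation the unadjusted comparison is inflated by the confounder, whereas the matched comparison should lie much closer to the simulated effect. Exposed individuals without a control within the caliper are dropped, which mirrors the exclusion of unmatched cohort members in practice.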

In a Finnish study, the propensity score for assignment to a multidisciplinary, vocational rehabilitation intervention to improve work ability was calculated for 24 000 persons in a cohort of public sector employees in municipalities and hospitals (37). The propensity score was calculated using logistic regression analysis with 25 variables, including demographics (eg, gender), work characteristics (eg, work schedules), health risk indicators (eg, psychological distress), and health risk behaviors (eg, smoking status) (38). Once the propensity score was estimated, 859 employees who participated in the intervention were matched by propensity score with 2426 controls, thereby excluding all other, unmatched employees in the entire cohort. The intervention showed adverse effects on perceived work ability and no beneficial effects on work disability: the risk of suboptimal work ability was somewhat higher after short- and long-term follow-up for participants than for controls (prevalence ratio 1.23 and 1.18, respectively) (37), while an earlier study showed that incident long-term work disability was about the same for participants and controls (hazard ratio 0.98) (38).

Some conditions need to be fulfilled before propensity scores can be considered as an alternative. The method assumes that all important prognostic variables are included and that the model is correctly specified (33, 34). If the propensity score is estimated imperfectly or the covariates are measured imperfectly, the resulting bias may affect the estimated intervention effect (33). One way to cope with this problem is to construct different sets of propensity scores to test the robustness of the estimated effect (39–41).

Instrumental variables method

The method of an instrumental variable is well known in the field of economics and applied to explore causal relationships between the intervention and an outcome in longitudinal studies (42). The method relies on finding a valid prediction variable, named “the instrument”, that meets three assumptions: it (i) predicts the actual intervention received, (ii) is not directly related to the outcome, except by the direct effect of the intervention, and (iii) is not related to the outcome by any other measured or unmeasured path (42–44). Elovainio and colleagues recently investigated the association of job demands and job strain with perceived stress, psychological distress and sleeping problems among elderly care workers (45). Staffing level (ie, the ratio of the total number of nursing staff to the number of residents in the elderly care wards) appeared to be a strong instrument for both job demands and job strain, and instrumental regression analyses showed statistically significant associations with perceived stress and psychological distress. Self-reported job demands and job strain revealed the same results. An advantage of this method is that it provides a way to obtain a potentially unbiased estimate of treatment effect, even in the presence of strong unmeasured confounding (44). Since instrumental variables predict compliance to an intervention (or actual exposure) but have, by definition, no direct, independent effect on the outcome, the method of instrumental variables can reach the same effect as randomization (44) and thereby counteracts challenge 2 (the organization objects to random assignment of persons or departments to the intervention or control group).
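The logic of the method can be illustrated with the simplest instrumental variable estimator, the ratio of the instrument–outcome covariance to the instrument–exposure covariance. The sketch below is an assumption-laden illustration rather than the analysis used in the cited studies: the data are simulated, the instrument is binary, the true effect of the exposure is set to 1.5, and an unmeasured confounder affects both exposure and outcome.

```python
import random

def mean(values):
    return sum(values) / len(values)

def cov(a, b):
    """Sample covariance of two equally long sequences."""
    ma, mb = mean(a), mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)

def ols_slope(x, y):
    """Ordinary regression slope of y on x (ignores unmeasured confounding)."""
    return cov(x, y) / cov(x, x)

def iv_estimate(z, x, y):
    """Ratio (Wald-type) instrumental variable estimate: cov(z, y) / cov(z, x)."""
    return cov(z, y) / cov(z, x)

# Simulated (hypothetical) data.
rng = random.Random(7)
n = 5000
instrument = [1 if rng.random() < 0.5 else 0 for _ in range(n)]   # affects exposure only
unmeasured = [rng.gauss(0, 1) for _ in range(n)]                   # affects exposure and outcome
exposure = [1.0 * z + u + rng.gauss(0, 0.5) for z, u in zip(instrument, unmeasured)]
outcome = [1.5 * x + 2.0 * u + rng.gauss(0, 1) for x, u in zip(exposure, unmeasured)]

print(f"ordinary regression slope: {ols_slope(exposure, outcome):.2f}")
print(f"instrumental variable estimate: {iv_estimate(instrument, exposure, outcome):.2f}")
```

Because the instrument is unrelated to the unmeasured confounder by construction, the ratio estimate should land near the simulated effect, while the ordinary regression slope absorbs the confounding. The denominator also shows why weak instruments are problematic: when the instrument barely predicts the exposure, the ratio divides by a number close to zero.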

As an example of this method, Behncke (46) investigated the effects of retirement on various health outcomes (eg, self-assessed health, chronic condition, and biological measures). Of the 1439 individuals at baseline, 192 subjects retired during the two year follow-up. Behncke assumed that reaching the state pension age affected the retirement decision, but was not directly related to health outcomes. The analyses showed that state pension age was a good predictor for retirement and thus a strong instrument. The results of the instrumental analyses showed that retirement significantly increased the risk of being diagnosed with a chronic condition.

Choosing the correct instrument for the analysis is a crucial factor in this design. Weak instruments (ie, a low correlation between the instrument variable and intervention or exposure variable) lead to large standard errors resulting in imprecise and biased results when the sample size is small (47). Therefore, this method is particularly useful for large samples and in case of moderate confounding.

Multiple baseline design

In a multiple baseline design the same intervention is implemented at different time points across groups with pre- and post-measurements (48, 49). Outcome variables are measured in all groups at baseline. Then, one or more groups receive the intervention while others remain in the control condition. After sufficient time has passed for the intervention to affect the outcome, outcomes are again measured in all groups and the intervention is introduced in the next one or more groups (48–50). This procedure minimizes challenge 3 (the organization wants to target all employees with an intervention). By sequentially introducing the intervention to groups, patterns of unexpected internal or external events can be studied, counteracting challenges 5 and 6 (the organization is subject to internal/external change). Compared to the RCT, fewer groups of participants are required in the multiple baseline design, since each group also acts as its own control (49), counteracting challenge 1 (only few clusters at the organizational level are available to evaluate the intervention). The design can be considered the non-randomized observational equivalent of the stepped-wedge design.

The evaluation of a behavioral contingency feedback intervention to increase attendance among 64 certified nursing assistants at three hospitals was conducted by applying a reversal (ie, ABA) multiple baseline design (51). The nine-week intervention was introduced across three groups at 16, 19, and 21 weeks after baseline measurement. All groups returned to the baseline situation (ie, A) after receiving the intervention (ie, B). The study ended with a final measurement after 39 weeks. The hospitals provided the research team with the working schedules of the participants and their sickness absence records. The repeated measures analysis of variance showed that the total number of absent days per week decreased in the intervention period [mean 0.13, standard deviation (SD) 0.17] compared to baseline (mean 0.24, SD 0.19) and increased again after returning to the baseline situation (mean 0.24, SD 0.20).

The main statistical challenge in using the multiple baseline design is the high autocorrelation of repeated measurements over time, which can lead to imprecise estimates of the intervention effect (49). Autocorrelation can be removed by Auto-Regressive Integrated Moving Average or Independent Time Series Analysis modeling (49). Another challenge is achieving sufficient baseline stability, which includes enough data points for precise estimates (52). Third, the duration of the study should be sufficiently long to monitor external variations without interference of other influences, such as seasonal effects (49). Routinely collected data are an efficient means to establish a stable baseline over an extensive time period and this may even reduce data collection costs (49).

Interrupted time series design

In the interrupted time series design, a series of measurements is performed before and after implementation of the intervention at population level in order to detect whether the intervention has a significantly greater effect than the underlying secular trend, such as an economic, market or demographic trend (eg, the change in average body height of a population over time) (53). Whether the intervention had a significantly larger effect than any underlying trend is estimated by comparing the trend in the outcome after the intervention to the trend in the pre-intervention period (54, 55). Since randomization is not a prerequisite in this design, challenge 2 (the organization objects to random assignment of persons or departments) does not apply. The design is particularly relevant when using routinely collected data, such as workers’ medical examinations, income insurance data, or workers’ compensation data (26).
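The comparison of pre- and post-intervention trends can be sketched as below. The example fits a separate straight line to each segment, which yields the same point estimates as a segmented regression with a level-change and a slope-change term, but it deliberately ignores autocorrelation and gives no standard errors. The series, the intervention time point, and the function names are hypothetical.

```python
def fit_line(t, y):
    """Least-squares intercept and slope of y on t."""
    n = len(t)
    mt, my = sum(t) / n, sum(y) / n
    slope = (sum((a - mt) * (b - my) for a, b in zip(t, y))
             / sum((a - mt) ** 2 for a in t))
    return my - slope * mt, slope

def interrupted_time_series(t, y, t0):
    """Compare the trend before t0 with the trend from t0 onwards."""
    pre = [(a, b) for a, b in zip(t, y) if a < t0]
    post = [(a, b) for a, b in zip(t, y) if a >= t0]
    b_pre, s_pre = fit_line(*zip(*pre))
    b_post, s_post = fit_line(*zip(*post))
    return {
        "pre_slope": s_pre,
        "post_slope": s_post,
        "slope_change": s_post - s_pre,
        # Jump at t0: post-line value minus the extrapolated pre-line value.
        "level_change": (b_post + s_post * t0) - (b_pre + s_pre * t0),
    }

# Hypothetical yearly outcome: declining by 0.5 per period before the
# intervention at period 5, and by 0.8 per period afterwards.
periods = list(range(12))
rates = [20 - 0.5 * t if t < 5 else 21 - 0.8 * t for t in periods]

result = interrupted_time_series(periods, rates, t0=5)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

A negative slope change here means the outcome declines faster after the intervention than the secular trend alone would predict; with real data, the number of time points on each side and the correlation between successive measurements determine how much confidence such a difference deserves.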

Farina and colleagues investigated the impact of national legislation on minimum safety and health requirements in 1999 on injuries at construction sites (56). Total and serious injury rates in the construction sector were calculated from 1994–2005, based on an integrated database (ie, Work History Italian Panel Salute). By applying segmented regression models that take into account secular trends and correct for any autocorrelation between the single observations, the results showed that the injury rates (per 10 000 weeks worked) decreased by 0.21 (95% CI -0.41– -0.01) per year more after the intervention than in the period before.

The main methodological concerns in applying the interrupted time series design for interventions are determining both the number of measurements before and after the intervention and the necessary time lags between measurements (eg, monthly or yearly data of sickness absence) to detect autocorrelations or secular trends (26, 57). Being able to determine specifically at what time point the intervention started is a precondition for applying the interrupted time series design (58).

Differences-in-differences

Differences-in-differences methods are common practice in economics to evaluate and interpret the effect of an inevitable change (eg, policy measure). In this design, observational data are used to compare the change in the outcome of a certain group that is subjected to an intervention at a specific time point to a change in the outcome in a group that is not exposed to this intervention (59). The method relies on finding a naturally occurring control group that mimics the properties of the intervention group and is therefore expected to follow the same time trend on the outcome as the intervention group would have in absence of the intervention (60). This design does not necessarily require measurements for the same individuals in each group over time, since repeated cross-sectional surveys can also be used (61). The intervention effect is calculated by subtracting the average change over time in the outcome variable in the control group from the average change in the intervention group. The design is thus an elegant way to account for the internal or external changes described earlier (challenges 5 and 6).
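The calculation itself is a subtraction of two changes, as the following sketch shows. The numbers are invented for illustration (eg, disability days per claim) and the group sizes differ between periods to reflect that repeated cross-sections, rather than the same individuals, may be used.

```python
def mean(values):
    return sum(values) / len(values)

def differences_in_differences(pre_int, post_int, pre_ctl, post_ctl):
    """Average change in the intervention group minus average change in the control group."""
    change_intervention = mean(post_int) - mean(pre_int)
    change_control = mean(post_ctl) - mean(pre_ctl)
    return change_intervention - change_control

# Hypothetical outcome values from two cross-sections per group.
pre_intervention = [30, 34, 32]        # mean 32
post_intervention = [24, 26, 25, 25]   # mean 25, change of -7
pre_control = [31, 33, 35]             # mean 33
post_control = [30, 31, 32, 31]        # mean 31, change of -2

effect = differences_in_differences(
    pre_intervention, post_intervention, pre_control, post_control
)
print(effect)  # -5.0
```

The control group's change of -2 stands in for what would have happened to the intervention group without the intervention, so only the remaining -5 is attributed to the intervention. That attribution holds only if both groups would otherwise have followed the same time trend.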

The differences-in-differences approach was applied to study the impact of a quality improvement intervention on reducing work disability, disability days, and disability and medical costs (62). The intervention firstly provided financial incentives to 512 health providers for faster adoption of occupational health best practices, and secondly focused on improvement of care coordination and disability management at patient level. A control group of 2297 providers with the same characteristics as the intervention group was constructed. Two cross sections of data were made, which included 33 910 workers’ compensation claims in the baseline period (15 408 and 18 502 for the intervention and control groups, respectively) and 71 696 (31 520 and 40 176 in the intervention and control groups, respectively) claims during the follow-up period. Patients of the providers in the intervention group were significantly less likely to be off work after one year, leading to a reduction in disability days, and lower disability and medical costs.

As with the multiple baseline design and the interrupted time series design, the main methodological concern in this approach is the autocorrelation of the outcome (63). To deal with this issue, Bertrand and colleagues recommended conducting quite sophisticated analyses, such as bootstrap techniques, when the number of groups is sufficiently large (63). Also, the differences-in-differences approach does not account for invariant factors and macro trends in one or both groups that might interfere with the outcome. Lastly, at the individual level, the impact of an intervention can be under- or overestimated due to unobserved, temporary and individual-specific events (60).

Regression discontinuity

The regression discontinuity design has been well established in economics over the last two decades, but not often applied in epidemiological studies. This design exploits a threshold or “cut-off” in a continuous variable used to assign treatment or intervention, and implies that individuals whose assignment values lie “just above” or “just below” this threshold belong to the same population (64, 65) and thus can be compared to each other. The causal effects can be estimated by comparing the outcome between the two groups (66), assuming that subjects are not able to manipulate the threshold value. Hence, challenges 1, 2, and 3 concerning randomization and control group are minimized.
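A minimal sketch of this comparison is given below. It uses the crudest possible estimator, a difference in mean outcome within a narrow window on either side of the threshold, whereas applied studies typically fit regressions on both sides. The data are simulated with an assumed jump of 3.0 at a cut-off of 45 on a hypothetical assignment variable, and the bandwidth of one unit is an arbitrary choice.

```python
import random

def mean(values):
    return sum(values) / len(values)

def rd_estimate(assignment, outcome, cutoff, bandwidth):
    """Difference in mean outcome just above versus just below the cutoff."""
    above = [y for a, y in zip(assignment, outcome) if cutoff <= a < cutoff + bandwidth]
    below = [y for a, y in zip(assignment, outcome) if cutoff - bandwidth <= a < cutoff]
    if not above or not below:
        raise ValueError("no observations within the bandwidth on one side of the cutoff")
    return mean(above) - mean(below)

# Simulated (hypothetical) data: the outcome rises smoothly with the assignment
# variable and jumps by 3.0 for everyone at or above the cutoff.
rng = random.Random(3)
n = 4000
assignment = [rng.uniform(40, 50) for _ in range(n)]
outcome = [
    10 + 0.2 * (a - 45) + (3.0 if a >= 45 else 0.0) + rng.gauss(0, 1)
    for a in assignment
]

estimate = rd_estimate(assignment, outcome, cutoff=45, bandwidth=1.0)
print(f"estimated jump at the cutoff: {estimate:.2f}")
```

The estimate slightly overstates the simulated jump because the outcome also drifts with the assignment variable inside the window. Narrowing the bandwidth reduces that drift but leaves fewer observations, which is one reason this design tends to need larger samples than an RCT.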

The causal effect of extending unemployment benefit duration on unemployment duration and post-unemployment outcomes was estimated in a regression discontinuity design (67). A sharp discontinuity for age could be used, since the maximum duration of unemployment benefits increases from 12 to 18 months at the age of 45. Age was considered the threshold value, ie, the assignment variable. The study population consisted of 3432 men (44–46 years) and 3784 women (43.5–46.5 years) who were unemployed in the period from 2001–2003. By including a dummy for being exposed (ie, being >45 years old), the exit rates from employment and unemployment in the group aged >45 years were compared to the exit rates from those in the control group. The hazard rates showed that a shorter duration of unemployment benefit was associated with a higher probability of entering paid employment.

The regression discontinuity design is only appropriate when treatment is assigned according to a strictly defined rule, linked to a continuously measured variable (such as age in the example above) (66). The assumption that individuals around the threshold are similar is often debatable (64). Other important factors to consider when applying this design are the possibility of change over time in the assignment variable and an unequal distribution of missing data between the two groups. This design requires larger sample sizes than an RCT to achieve sufficient statistical power (68). Its feasibility can be improved by using routine clinical or administrative data (66).

Discussion

This article demonstrated the appropriateness of research designs other than the RCT for the evaluation of occupational health interventions. Studies in which these research designs have been applied successfully showed that the most fundamental question in intervention research could be answered, ie, did change actually occur as a result of the intervention? The designs were either experimental in nature (ie, stepped wedge) or observational (ie, propensity scores, instrumental variables, multiple baseline design, interrupted time series, difference-in-differences, and regression discontinuity).

Some of the alternative designs (eg, multiple baseline design) require more complex statistical models that may contain a relatively large number of parameters in order to account for heterogeneity across clusters. In these cases, larger sample sizes might be needed than would be the case for an individual RCT. Furthermore, in any intervention evaluation, it seems worthwhile to determine systematically how implementation influenced the results by conducting a process evaluation. A well-known implementation model for public health and community-based interventions is the RE-AIM framework, which assesses reach, efficacy, adoption, implementation, and maintenance (69). Nielsen and Randall’s implementation model might be more helpful for organizational-level occupational health interventions since it additionally takes into account the mental models (ie, readiness for change and perception) of those involved (70).

Even though several researchers have acknowledged that conducting an RCT on a complex intervention within an occupational health context is not always preferable, the described alternative designs are not yet widely adopted in occupational health. This could be explained by researchers’ unfamiliarity with the alternatives and their advantages and disadvantages compared to the RCT, or by researchers feeling pressured to apply an RCT to maximize the chance of publication. Hopefully, this article serves as a nudge for colleagues to consider alternative research designs for the evaluation of interventions. This article also aimed to provide the information needed to select the most appropriate design to answer the research question, with the highest possible level of internal and external validity and the lowest costs. Designs using observational data, for instance, are particularly useful for organizational interventions or policy measures when sufficient administrative data are available, allowing for a timely evaluation of the impact of such interventions. Observational designs may be especially applicable to research in dynamic work contexts characterized by, eg, high turnover, organizational restructuring, or internal mobility. The RCT is based on a fixed cohort whereby individuals are enrolled at the same time (ie, the start of the study) and followed up for a similar period, which may be difficult to achieve in organizations with a high annual turnover of personnel. Some alternative designs are based on dynamic cohorts whereby individuals can enter and leave the cohort at different times, eg, the designs based on repeated cross-sectional data (see table 2). This may be an additional reason to prefer an observational design over an RCT.

The societal trend of big data deserves to be mentioned at this point. Some have proclaimed the current period, with its digitized patient records in large databases, to be an “open information era” as a result of the increased transparency of public institutions and governments (71). Research can benefit from the readily accessible data this “era” yields by combining large amounts of information gathered for different purposes via different devices or media (ie, big data, so called for its variety, volume, and velocity) (72). In doing so, we can discover correlations that would not be discovered in carefully constructed evaluations, which typically set out to test causal relations. Big data are thus of particular interest for the described alternative research designs that draw on routinely collected data.

The research designs described in this article are appropriate to evaluate the effect of an intervention that is noticeable within a period of months to several years. However, the time lag between the intervention and the consequences for health can take many more years (eg, the effect of an intervention to reduce occupational exposure to dust on diseases such as silicosis and COPD). In these situations, other designs need to be considered, such as health impact assessment (HIA), which simulates the development of illness over time, based on the combined estimate of three models covering the stages of the disease, the effect of exposure on the stages of disease, and population characteristics. Meijster and colleagues combined a multi-stage model of respiratory problems, exposure to flour dust and allergens, and career length and influx of new workers, to estimate respiratory health outcomes of workers in the bakery sector (73). The probability of transitioning to the next stage of disease, per unit of exposure, per year was calculated, so that incidence could be determined. The combined model demonstrated how respiratory problems develop over time and how exposure and population characteristics contributed, eg, a mean latency period of 10.3 years (95% CI 8.3–12.3) for developing respiratory symptoms in bakers was predicted (73).

Even though the RCT is still the preferred design for interventions targeted at the individual level, this article provides an overview of appropriate alternatives when a group-level intervention is applied, or if methodological or feasibility issues are encountered in an individual RCT that obscure the intervention–outcome relationship. The choice of the most appropriate design will be guided by the specific research question, complexity of the intervention, data available, context, and costs. Moreover, researchers conducting systematic reviews should not neglect evidence from studies applying alternative research designs. They should broaden their inclusion criteria towards observational studies with appropriate designs. When these alternative designs are applied more often, further research is necessary on the development and implementation of a guideline to improve the quality of reporting of non-randomized studies. We highly recommend adopting and further exploring the possibilities of both experimental alternatives and alternatives based on observational data for the evaluation of occupational health interventions.