Work stress is a problem for individuals, organizations, and society at large. It poses a threat to workers’ well-being by increasing mental and physical health risks (1, 2). Work stress also contributes substantially to sickness absence (3). This is a costly problem for organizations and society in general. The annual price tag of work stress to society amounts to €20 billion in the European Union alone (4). In order to combat this problem, organizations deploy stress management interventions (SMI). Scientific evaluations of these SMI can support organizations in making an informed choice about the most effective and appropriate intervention and may also help to test theories upon which the interventions are based.

The most widely used approach to SMI evaluation is characterized by a (quasi-) experimental research design that focuses on outcomes at the level of the worker (eg, stress, burnout) (5–7). According to Kristensen (8), whether or not the intervention has had the desired effect on the targeted outcome is only one of three important questions to ask when evaluating an intervention. To interpret the effect, one should firstly assess if the intervention was carried out as intended and then assess if the intervention brought about the intended (change in) exposure or behavior. This way, a distinction can be made between program versus theory success in effect interpretation.

A way to gather information about the success or failure of an intervention program is to look at intervention implementation. This can be done by studying process variables (9–12). There are different ways of investigating the implementation process. Steckler and Linnan (9), for instance, propose a focus on intervention delivery and participation. Fleuren et al (13) assert that components, such as the sociopolitical context, and characteristics of the organization, participant (skills, knowledge, and perceived support), and the intervention itself (complexity, relative advantage) are also important for implementation. Finally, Nielsen and Randall (11) suggest that mental models (pertaining to constructs such as readiness for change) should be added to existing process evaluation frameworks.

Despite increasing support for the incorporation of process factors into the evaluation of SMI in the last 10–15 years, there is still limited consensus on which process variables should be assessed. In addition to frameworks that offer suggestions for the use of certain process variables, insight into current practice could also support future process evaluations of SMI. Overviews that stress the importance of process measures in organizational-level intervention evaluations do exist. Egan and colleagues (14), for example, provide a review of implementation appraisal of complex social interventions, concluding that implementation and context are crucial for impact assessment of these interventions. To the best of our knowledge, however, the only review describing which process variables are used in SMI evaluation research was published a decade ago by Murta et al (15). In accordance with the aforementioned different perspectives on process evaluation, they observed great heterogeneity in the variables and designs researchers use for SMI process evaluation. Murta and colleagues made this observation using a restricted selection of process variables (15). A limitation of this restricted selection is that publications reporting other process variables could have been overlooked. Building on their research, a broader approach can leave room for more current frameworks to be recognized in process evaluation practice. The aim of this review was to explore which process variables are used in SMI evaluation research.

Methods

A systematic literature review was performed to investigate which process variables are reported in SMI evaluation research. Components from the PRISMA statement (16) were used in reporting this systematic review.

Search and study selection

Studies were eligible for inclusion if they (i) reported on an SMI directed at paid workers aged ≥18 years, (ii) reported a process evaluation of the intervention (at least one process variable assessed), (iii) were published in a peer-reviewed journal (conference abstracts, books and design protocols were excluded), and (iv) were written in English or Dutch. An SMI was defined as an organizational intervention focusing on individual or organizational changes, targeted to prevent or reduce stress in employees at the primary or secondary prevention level. A process variable was defined as any measure included in the evaluation study that is hypothesized to be associated with the process of SMI implementation.

Together with a library search specialist, the following databases were searched from inception to October–December 2014: PubMed, PsycINFO (October 8, 2014), ISI/Web of Science, Embase (October 24, 2014), ProQuest (December 3, 2014), EconLit (December 5, 2014), and EBSCO/CINAHL (December 11, 2014). For every database, the search was adapted to the terminology specific to that database, using synonyms and closely related words (for the complete search, see the Appendix, www.sjweh.fi/data_repository.php). If a design protocol mentioned a process evaluation, the first author searched the electronic literature databases and contacted the authors to identify additional studies.

The first author removed the duplicates from the records identified. Then, the first and the second author independently screened titles and abstracts of all remaining records, selecting articles for fulltext inspection, using the aforementioned eligibility criteria. If at least one of the two authors had selected a record for fulltext inspection, it was retrieved. Subsequently, both authors independently assessed the retrieved articles for inclusion. Their independent decisions on inclusion and exclusion of fulltext articles agreed in 72% of cases. Remaining discrepancies were resolved in face-to-face deliberation. When this did not lead to consensus, one of the co-authors was consulted to make a final decision.
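The agreement figure reported above can be illustrated with a minimal sketch. It assumes that the 72% refers to simple percent agreement between the two screeners, which the text does not state explicitly; the function name and the include/exclude decisions below are hypothetical and were chosen only so that 72 of 100 decisions match.

```python
from typing import Sequence


def percent_agreement(rater_a: Sequence[bool], rater_b: Sequence[bool]) -> float:
    """Percentage of articles on which two raters made the same decision."""
    if len(rater_a) != len(rater_b):
        raise ValueError("Both raters must judge the same set of articles")
    if not rater_a:
        raise ValueError("At least one article is required")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


# Hypothetical decisions for 100 fulltext articles (True = include).
# The split is invented; only the number of matching decisions (72) mirrors the text.
first_author = [True] * 40 + [False] * 60
second_author = [True] * 30 + [False] * 10 + [True] * 18 + [False] * 42

print(percent_agreement(first_author, second_author))
```

Percent agreement does not correct for agreement expected by chance; a chance-corrected statistic such as Cohen's kappa would need the full cross-tabulation of decisions, which is not reported here.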

Data extraction

A template was constructed, containing a list of data to be extracted from the included articles. The first and second author independently applied this template to two randomly selected articles. They then compared the data they had extracted and modified and further specified the template until consensus was reached. Random selection and coding of studies by both the first and second author was repeated for 20% of all articles, after which a clear coding format was obtained.

The template used for data extraction contained three main component categories: (i) study and intervention characteristics, (ii) process evaluation methods, and (iii) process variables. Intervention characteristics were adapted from Murta and colleagues (15). The process evaluation methods components were adapted from Wierenga and colleagues (17). The specific components are listed in table 1. Process variables were coded using a list of concepts derived from process evaluation literature (9, 11, 13, 15, 17). During coding, the researchers used this list as a frame of reference and starting point. When necessary, they diverged from the list so as not to exclude variables that were not on it but were used as process variables. Data were collected at the level of the employee (micro level), the level of the supervisor, manager, or department (meso level), and at the level of the CEO, organization, or sector (macro level). For every process variable, it was assessed how many articles reported data collected at the micro, meso, or macro level.
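The per-variable tally described above can be sketched as follows. This is an illustration only: the record layout, the function name `tally_levels`, and the example records are assumptions, not the authors' extraction template, and each (article, variable) pair is assumed to occur once.

```python
from collections import Counter, defaultdict

# Hypothetical coded records: (article, process variable, levels of data collection).
coded_records = [
    ("Article A", "support", {"micro", "meso"}),
    ("Article B", "support", {"micro"}),
    ("Article B", "context", {"meso", "macro"}),
    ("Article C", "context", {"micro", "meso"}),
]


def tally_levels(records):
    """Count, per process variable, the reporting articles and the levels used."""
    n_articles = Counter()
    level_counts = defaultdict(Counter)
    for _article, variable, levels in records:
        n_articles[variable] += 1
        # Each level is counted once per article that collected data at that level.
        level_counts[variable].update(levels)
    return n_articles, level_counts


n_articles, level_counts = tally_levels(coded_records)
for variable in sorted(n_articles):
    counts = level_counts[variable]
    print(variable, n_articles[variable], counts["micro"], counts["meso"], counts["macro"])
```

Because one article can collect data at several levels, the level counts for a variable can add up to more than its number of articles.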

Table 1

Studies reporting process variables. [CR=company records; DC=data collection; N=number of intervention participants; ON=observation notes; PA=participatory approach; PE=reference made to process evaluation literature; Pre/during/post=before/during/after the intervention; Ref=reference to literature.]

| Author | Country | Sector | N | PA a | PE | DC moment | Level of DC b | DC c | Method of DC |
|---|---|---|---|---|---|---|---|---|---|
| Albertsen et al, 2014 (35) | Denmark | Healthcare | 840 | No | No | Pre, post | Micro, meso | Qualitative | Focus groups, interviews |
| Aust et al, 2010 (26) | Denmark | Healthcare | 399 | Yes | No | During, post | Micro, meso | Combined | ON, questionnaire, reports |
| Biron et al, 2010 (29) | UK | Utilities | 205 | Yes | Yes | Pre, during, post | Micro, meso | Combined | Interviews, ON |
| Bourbonnais et al, 2006 (27) | Canada | Healthcare | 492 | Yes | No | During | Micro, meso | Qualitative | Interviews, ON |
| Bruneau et al, 2004 (36) | UK | Healthcare | 18 | No | No | During | Micro | Quantitative | Questionnaire |
| Bunce et al, 1996 (37) | UK | Healthcare | 118 | No | Yes | Post | Micro | Quantitative | Questionnaire |
| Burton et al, 2010 (38) | Australia | Education | 18 | No | No | During, post | Micro | Combined | ON, questionnaire, CR |
| Coffeng et al, 2013 (18) | NL | Financial | 306 | Yes | Yes | Post | Micro, meso | Combined | ON, questionnaire, CR |
| Cohen-Katz et al, 2005 (39) | USA | Healthcare | 25 | No | No | During, post | Micro, macro | Combined | Focus groups, interviews, ON, questionnaire, CR |
| Falck et al, 1984 (40) | USA | Education | 32 | No | Yes | During | Micro, meso, macro | Combined | Conference, ON, questionnaire |
| Günüsen et al, 2009 (23) | Turkey | Healthcare | 72 | No | No | Post | Micro | Qualitative | Interviews |
| Hasson et al, 2014 (20) | Sweden | Mixed | - d | No | Yes | During | Meso, macro | Qualitative | Interviews |
| Hasson et al, 2014 (41) | Canada | Financial | 1714 | No | Yes | Post | Micro | Quantitative | Questionnaire |
| Heaney et al, 1993 (42) | USA | Manufacturing | 176 | Yes | No | During, post | Micro, meso, macro | Combined | Interviews, ON, questionnaire |
| Helms-Lorenz et al, 2013 (43) | NL | Education | 192 | Yes | No | Post | Micro | Quantitative | Questionnaire |
| Ipsen et al, 2014 (19) | Denmark | Mixed | 129 | Yes | Yes | During, post | Micro, meso, macro | Combined | Interviews, ON, questionnaire, workshop |
| Jeffcoat et al, 2012 (22) | USA | Education | 121 | No | No | During, post | Micro | Quantitative | Questionnaire, CR |
| Jenny et al, 2014 (34) | Switzerland | Mixed | 3532 | Yes | Yes | During, post | Micro, meso | Combined | Interviews, questionnaire, CR |
| Kawai et al, 2010 (44) | Japan | Mixed | 168 | No | Yes | During | Micro | Quantitative | Questionnaire |
| Keller et al, 2012 (45) | USA | Healthcare | 60 | No | No | Pre, during, post | Micro | Quantitative | Questionnaire |
| Landsbergis et al, 1995 (46) | USA | Healthcare | 63 | Yes | No | Post | Micro | Qualitative | Interviews |
| Leonard et al, 1999 (47) | Australia | Police | 30 | No | No | Post | Micro | Qualitative | Interviews |
| Mellor et al, 2011 (48) | UK | Mixed | - d | Yes | No | During, post | Micro, meso, macro | Combined | Interviews, CR |
| Mellor et al, 2013 (49) | UK | Mixed | - d | Yes | No | During | Meso | Combined | Interviews, CR |
| Millear et al, 2008 (50) | Australia | Utilities | 28 | No | Yes | Post | Micro, meso | Combined | Questionnaire, records |
| Nielsen et al, 2006 (51) | Denmark | Healthcare | 144 | Yes | Yes | During, post | Micro, meso | Combined | Focus groups, group meetings, interviews, ON, reports, questionnaire |
| Nielsen et al, 2007 (52) | Denmark | Mixed | 538 | Yes | Yes | During, post | Micro, meso | Quantitative | Questionnaire |
| Nielsen et al, 2012 (53) | Denmark | Healthcare | 583 | No | Yes | Post | Micro | Quantitative | Questionnaire |
| Petterson et al, 1998 (54) | Sweden | Healthcare | 3506 | Yes | No | - d | Micro, meso | Quantitative | Questionnaire |
| Randall et al, 2007 (24) | UK | Healthcare | - f | Yes | Yes | Post | Micro | Qualitative | Interviews |
| Randall et al, 2009 (31) | Denmark | Healthcare | 551 | No | Yes | Post | Micro, meso | Qualitative | Focus groups, interviews |
| Renaud et al, 2008 (21) | Canada | Financial | 656 | No | No | Post | Micro, meso | Combined | Interviews, questionnaire |
| Reynolds et al, 1993 (55) | UK | Healthcare | 92 | No | Yes | During, post | Micro | Quantitative | Questionnaire |
| Reynolds et al, 1993 (56) | UK | Healthcare | 92 | No | Yes | During, post | Micro | Quantitative | Questionnaire |
| Saksvik et al, 2002 (25) | Norway | Mixed | 685 | No | Yes | Pre, post | Micro, meso | Qualitative | Interviews, ON, reports |
| Schwerman et al, 2012 (57) | USA | Healthcare | 3930 | No | No | Post | - d | Quantitative | Questionnaire |
| Sorensen et al, 2014 (28) | Denmark | Mixed | 163 | Yes | Yes | During, post | Micro, meso, macro | Combined | Interviews, ON, questionnaire, CR |
| Swain et al, 2014 (58) | NZ | Healthcare | 56 | No | No | Post | Micro | Qualitative | Questionnaire |
| Van Bogaert et al, 2014 (59) | Belgium | Education | 170 | Yes | No | Post | Micro | Quantitative | Questionnaire |
| Van Wingerden et al, 2013 (60) | NL | Education | 50 | No | No | During, post | Micro | Qualitative | Interviews |
| Weigl et al, 2012 (32) | Germany | Healthcare | 17 | Yes | No | Post | Micro | Qualitative | Interviews |
| Weigl et al, 2013 (61) | Germany | Healthcare | 19 | Yes | Yes | Post | Micro | Qualitative | Interviews |
| Westlander et al, 1995 (62) | Sweden | Telecom | 300 | Yes | Yes | During, post | Micro, meso, macro | Combined | Group meetings, interviews, questionnaire, CR |
| Van Berkel et al, 2013 (63) | NL | Research | 257 | No | Yes | Pre, during, post | Micro, meso | Combined | Interviews, questionnaire |

a Cooperation of different stakeholders in the assessment, targeting, and prevention of work stress.

Analyses

The Nielsen and Randall model for process evaluation (11) was used to cluster the process variables retrieved because the model was developed especially for organizational-level interventions and it provides the opportunity to take a broad perspective on process evaluation. Using this model, mediating and moderating factors of implementation can be detected. The cluster to which each process variable should be assigned was deliberated first with two of the co-authors and then with all authors of this review. After each deliberation, the arrangement was adjusted according to the feedback received. The main clusters were context, intervention, and mental models. The content of the three clusters is in accordance with the three central themes of the Nielsen and Randall model for process evaluation (11). Context pertains to situational aspects that affect organizational behavior and functional relationships between variables, and contains hindering and facilitating factors. The intervention cluster refers to aspects of intervention design and implementation that determine the maximum levels of intervention exposure that can be reached, and contains the sub-clusters initiation, intervention activities, implementation, and implementation strategies. The mental models cluster refers to underlying psychological aspects that may help explain stakeholders’ behavior in and reaction to the intervention. The mental models cluster contains the sub-clusters readiness for change, perceptions, and changes in mental models.

Results

Study selection

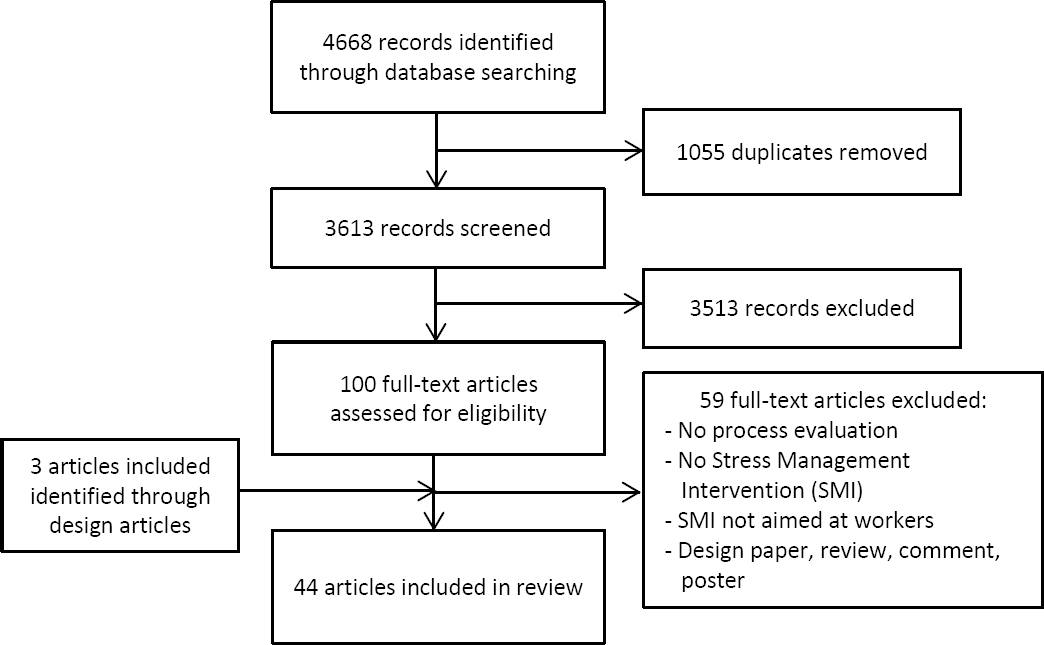

The database search identified 4668 records. After duplicates were removed, an initial screening of titles and abstracts of the 3613 remaining records produced 100 potentially relevant publications. After screening of the 100 retrieved fulltext articles, 59 were excluded. The three main reasons for exclusion were: no process evaluation, no SMI, no results presented (design or protocol paper). Additionally, three eligible articles were identified through design papers. Finally, 44 articles met the selection criteria, and were included in this review (Figure 1). Two studies were reported in more than one of the included articles, so 42 studies are represented in this review.
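The counts in the study-selection paragraph can be checked with a short arithmetic sketch. The variable names are illustrative, the derived quantities (duplicates removed, records excluded on title and abstract) are computed here rather than reported in the text, and the last step assumes that each of the two multiply-reported studies appears in exactly two articles.

```python
# Counts stated in the study-selection paragraph.
records_identified = 4668
records_after_deduplication = 3613
fulltext_assessed = 100
fulltext_excluded = 59
added_via_design_papers = 3

# Derived quantities (computed here, not stated in the text).
duplicates_removed = records_identified - records_after_deduplication
excluded_on_title_abstract = records_after_deduplication - fulltext_assessed
articles_included = fulltext_assessed - fulltext_excluded + added_via_design_papers

# Assumption: each of the two multiply-reported studies appears in exactly two articles.
studies_reported_twice = 2
studies_represented = articles_included - studies_reported_twice

print(duplicates_removed, excluded_on_title_abstract, articles_included, studies_represented)
```

Under these assumptions the derived totals match the 44 articles and 42 studies reported above.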

Study and intervention characteristics

Table 1 presents the most important characteristics of the studies in the context of this review. Of the 42 reported studies, 27 were conducted in Europe (64%), 10 in North America (24%), 4 in Australia or New Zealand (10%), and 1 in Asia (2%). Most studies were conducted in the healthcare sector (45%), followed by education (13%). Of the 42 studies, 9 (21%) were conducted in a mixed set of organizations in more than one sector. More than half of the interventions (55%) had a participatory format. A participatory approach is characterized by cooperation of different stakeholders (eg, employees, managers, intervention providers) in the assessment, targeting, and prevention of work stress. Intervention duration ranged from 1 to 312 weeks, with most intervention durations (64%) not exceeding one year.

Half (50%) of the 44 articles did not contain any reference to process evaluation literature in the introduction or methods section. In 20 articles, process evaluation data were collected at more than one moment. Collection of process evaluation data mostly took place after (84%) or during the intervention (55%). In five cases, process evaluation data were collected pre-intervention (11%). In most articles (93%), process evaluation data were collected at the micro level. In 22 and 8 articles, process evaluation data were collected at the meso and macro level, respectively. All articles that reported only quantitative data for process evaluation used a questionnaire to collect these data. In the articles that reported a qualitative or a combined approach, (group) interviews were mostly used for process evaluation.

Process variables

Table 2 shows all 47 process variables that were retrieved. Some of the most striking findings are discussed below. The context cluster contained 2 process variables, the intervention cluster 31, and the mental models cluster 14. The intervention sub-cluster initiation contained 3 process variables, intervention activities 8, implementation 8, and implementation strategy 12. The mental models sub-cluster readiness for change contained 4 process variables, perceptions 7, and changes in mental models 3. For every process variable, the general level of data collection is reported.
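As a quick consistency check, the sub-cluster counts given above can be summed per cluster. The dictionary layout is only an illustrative way to hold the numbers from the text; the key used for the context cluster, which has no sub-clusters, is a placeholder.

```python
# Number of process variables per sub-cluster, as stated in the text.
clusters = {
    "context": {"(no sub-clusters)": 2},
    "intervention": {
        "initiation": 3,
        "intervention activities": 8,
        "implementation": 8,
        "implementation strategy": 12,
    },
    "mental models": {
        "readiness for change": 4,
        "perceptions": 7,
        "changes in mental models": 3,
    },
}

# Sum the sub-cluster counts per cluster, then across clusters.
cluster_totals = {name: sum(sub.values()) for name, sub in clusters.items()}
total_variables = sum(cluster_totals.values())

print(cluster_totals, total_variables)
```

The sums reproduce the reported cluster sizes (2, 31, and 14) and the overall total of 47 process variables.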

Table 2

Process variables reported in the included articles.

| Clusters and concepts | Concept descriptions a | Studies (N) | Level of data collection b: micro | Level of data collection b: meso | Level of data collection b: macro | References |
|---|---|---|---|---|---|---|
| Cluster: Context | | | | | | |
| Context | Contextual factors affecting the implementation of intervention | 19 | 18 | 13 | 6 | (18–20, 23–25, 27–29, 34, 35, 37–39, 46, 48, 51, 62, 63) |
| Barriers/facilitators | Factors that hinder or help implementation of intervention | 6 | 6 | 6 | 2 | (18, 19, 25, 48, 51, 63) |
| Cluster: Intervention | | | | | | |
| Sub-cluster: Initiation | | | | | | |
| Motivation | Motivation to use intervention | 7 | 6 | 7 | 2 | (20, 25, 26, 29, 34, 40, 63) |
| Initiation | Activities related to the initiation of intervention | 3 | 2 | 3 | 3 | (19, 20, 48) |
| Ownership | Extent to which intervention stakeholders consider themselves drivers of implementation | 2 | 2 | 2 | 1 | (19, 29) |
| Sub-cluster: Activities | | | | | | |
| Responsibility | Responsibility of intervention stakeholders for implementation | 10 | 8 | 10 | 3 | (19, 20, 25, 28, 29, 34, 35, 49, 51) |
| Integration | Extent to which intervention is integrated into daily work processes | 6 | 4 | 5 | 3 | (19, 20, 24, 28, 34, 49) |
| Appropriateness | Extent to which stakeholders consider intervention appropriate for the organization | 4 | 4 | 3 | 3 | (19, 23, 28, 48) |
| Monitoring | Monitoring progress of intervention implementation | 3 | 3 | 2 | 1 | (19, 34, 61) |
| Adoption | Extent to which stakeholders adopt intervention initiatives | 2 | 2 | 2 | 1 | (28, 34) |
| Maintenance | Extent to which the intervention is used after implementation | 2 | 2 | 1 | - | (34, 59) |
| Comfort | Extent to which stakeholders feel comfortable with requirements | 1 | 1 | 1 | - | (29) |
| Tailoring | Extent to which intervention is tailored to user needs | 1 | 1 | 1 | - | (63) |
| Sub-cluster: Implementation | | | | | | |
| Dose received | Extent to which users actively engage in intervention | 13 | 12 | 10 | 4 | (18–22, 26, 29, 34, 37, 38, 40, 45, 63) |
| Participation | Participation in intervention | 12 | 12 | 8 | 4 | (21, 24, 25, 28, 39, 42, 48, 52–54, 61) |
| Outcome | Extent to which stakeholders consider implementation of intervention successful | 8 | 7 | 5 | 4 | (19, 20, 29, 32, 40, 43, 61, 62) |
| Dose delivered | Amount of intended intervention components delivered to users | 8 | 8 | 7 | 3 | (18, 19, 24, 26, 28, 50, 51, 62) |
| Fidelity | Extent to which the intervention is delivered as planned | 7 | 7 | 7 | 2 | (18, 25, 40, 50, 51, 62, 63) |
| Reach | Proportion of target population that participates in intervention | 5 | 5 | 5 | - | (18, 27, 29, 34, 63) |
| Exposure | Extent to which intervention users are exposed to intervention | 4 | 4 | 2 | - | (21, 24, 31, 41) |
| Recruitment | Procedures used to recruit intervention users | 4 | 4 | 3 | 1 | (18, 34, 40, 43) |
| Sub-cluster: Implementation strategy | | | | | | |
| Support | Intervention stakeholders’ support for intervention | 24 | 22 | 15 | 5 | (19–21, 23–29, 32, 34, 35, 40, 46, 49, 51, 55, 56, 59–63) |
| Information/communication | Information and communication about intervention | 15 | 13 | 12 | 7 | (19, 20, 24, 27, 28, 34, 40, 42, 44, 48, 49, 52, 61–63) |
| Involvement | Involvement of stakeholders in intervention and/or intervention activities | 11 | 10 | 7 | 3 | (24, 25, 28, 31, 42, 48, 49, 54–56, 59) |
| Resources | Resources (eg, money, time, manpower) available for intervention | 7 | 6 | 6 | 2 | (19, 25, 34, 48, 49, 60, 63) |
| Roles | Clarity of stakeholders’ roles within intervention | 6 | 5 | 6 | 2 | (19, 20, 25, 26, 35, 51) |
| Expertise | Extent to which users have experience with specific intervention activities (eg, risk assessment) | 3 | 3 | 3 | 2 | (28, 35, 48) |
| Attractiveness | Extent to which intervention materials are attractive for users | 1 | 1 | 1 | - | (63) |
| Cooperation | Extent to which intervention stakeholders work together | 1 | 1 | - | - | (32) |
| Independency | Extent to which the project agenda is independent | 1 | 1 | - | - | (61) |
| Coherence | Extent to which intervention elements are related to each other | 1 | 1 | 1 | - | (34) |
| Responsiveness | Extent to which intervention provider is responsive to stakeholders | 1 | 1 | 1 | - | (63) |
| Scale | Scale to which intervention is implemented in the organization | 1 | 1 | 1 | 1 | (28) |
| Cluster: Mental models | | | | | | |
| Sub-cluster: Readiness for change | | | | | | |
| Awareness of problem | Awareness of challenges related to either work stress or implementation of the intervention | 7 | 7 | 5 | 2 | (28, 34, 48, 54–56, 63) |
| Readiness for change | Extent to which intervention stakeholders are ready for change | 6 | 5 | 6 | 1 | (19, 29, 31, 49, 51, 63) |
| Project fatigue | Feeling that organization has initiated too many surveys/projects | 3 | 2 | 3 | 1 | (25, 48, 49) |
| Intention to act | Intention to participate in intervention program | 1 | 1 | - | - | (44) |
| Sub-cluster: Perceptions | | | | | | |
| Attitudes and perceptions | Attitudes and perceptions of users related to the intervention | 30 | 29 | 18 | 7 | (19–21, 23, 25–29, 31, 32, 34–41, 46, 48, 51, 52, 54–56, 58, 60, 62, 63) |
| Satisfaction | Satisfaction with the intervention | 6 | 6 | 5 | - | (18, 21, 26, 35, 47, 63) |
| Engagement | Engagement of stakeholders in intervention implementation | 4 | 3 | 4 | 2 | (20, 21, 25, 28) |
| Trust | Trust between stakeholders involved in intervention | 3 | 3 | 2 | 1 | (19, 23, 63) |
| Enjoyment | Enjoyment in intervention | 2 | 2 | 1 | - | (44, 50) |
| Enthusiasm | Enthusiasm about the intervention | 1 | 1 | 1 | - | (25) |
| Influence | Influence on the contents of the intervention | 1 | 1 | 1 | - | (52) |
| Sub-cluster: Changes in mental models | | | | | | |
| Effectiveness beliefs | Beliefs about effectiveness of the intervention | 14 | 11 | 5 | 2 | (20, 23–25, 32, 39, 41, 46, 49, 50, 57, 59, 60, 63) |
| Perceived impact | Perception about impact of the intervention | 11 | 11 | 6 | 2 | (21, 24, 34, 39, 45, 51–56) |
| Expectations | Expectations of stakeholders about the intervention | 6 | 6 | 5 | 1 | (23, 25, 26, 28, 34, 35) |

Attitudes and perceptions of intervention users were reported most frequently (30 articles), followed by support (N=24), context (N=19), information/communication (N=15), and effectiveness beliefs (N=14).

Clusters

Context

Both a cluster and process variable, context was the third most reported variable. Coffeng and colleagues (18), for instance, reported a reorganization at the beginning of the intervention period as an example of context. Another contextual factor they reported was the fact that three months before the intervention project started, another intervention to improve the work environment had been piloted. Of 19 articles reporting context, 18 reported data that were collected at least at the micro level. The other process variable in this cluster was barriers and facilitators, which was reported six times. Ipsen et al (19) gave an example of both: making the wrong changes slows the process (barrier) and the intervention constitutes a collective process (facilitator).

Intervention

The first sub-cluster of the intervention cluster was initiation, which contains the process variables motivation, initiation of the intervention, and ownership. Motivation was reported in 7 articles, all of which collected data at least at the meso level. In the second sub-cluster, intervention activities, responsibility was reported most and refers to the extent to which different stakeholders are accountable for carrying out intervention actions. Hasson and colleagues (20) found that senior management differed with human resource professionals about who was responsible for involving line managers in the intervention. In the third sub-cluster, implementation, dose received was the most reported process variable, examples of which included self-reported participation in intervention modules (21) and quiz completion of participants across several quizzes during the intervention (22). Data collection at the micro level was dominant in this sub-cluster. For the sub-cluster implementation strategy, support was the most-reported process variable, examples of which include support from management toward employees to attend intervention sessions (23) and the visibility of senior management’s involvement in the intervention (24). Also in this sub-cluster, 11 articles reported the process variable involvement, which was, for example, described as the extent to which stakeholders took part in the development of a plan of action (25).

Mental models

In the mental models cluster, the first sub-cluster was readiness for change, of which awareness of problem/intervention was the most reported process variable. Readiness for change was reported in 6 articles, all of which collected data at the meso level. The second sub-cluster, perceptions, contained the most-reported process variable across all 44 articles: attitudes and perceptions of intervention users, examples of which included employees’ criticism of intervention consultants (26) and the belief of employees that management did not take their needs into account (27). In the 30 articles reporting attitudes and perceptions of intervention users, almost all reported data were collected at least at the micro level. Engagement was found in articles that primarily reported data collection at the meso level. For engagement, Sorensen and Holman (28) reported differences between working groups in the extent to which they were able to include employees in the implementation process. The third and last sub-cluster contained process variables that represent changes in mental models. In this sub-cluster, effectiveness beliefs were reported most and were most often investigated at the micro level.

Discussion

The aim of this systematic review was to explore which process variables have been used in SMI evaluation research. In the 44 articles, we found 47 process variables, which were divided into three clusters: (i) context contained 2 variables, (ii) intervention contained 31 variables, and (iii) mental models contained 14 variables. There was great variety in the process variables assessed, but the three most-reported were attitudes and perceptions of intervention users (mental models cluster), support (intervention cluster), and context (context cluster). Many process variables were different from those reported by Murta and colleagues (15). This systematic review revealed that relatively few studies contained theoretical frameworks to guide process evaluations. Half of the articles did not contain any reference to process evaluation literature in the introduction or the methods section.

Different frameworks for process evaluation are available. Two in particular were present in the studies included in this systematic review, and each provides a different perspective on process evaluation. The first framework, proposed by Linnan and Steckler (9), focuses on implementation. In the findings of the present review, the framework was represented by process variables such as dose delivered (the extent to which the intervention was made available by its providers), dose received (the extent to which the target population actively uses or engages in intervention facilities and activities), and fidelity (the extent to which the intervention was delivered as planned). Evaluating implementation answers the question “Was the intervention carried out as intended?”. However, this is only one of three important questions for intervention evaluation (8). Focusing solely on implementation in the process evaluation leaves unanswered the question “Did the intervention bring about the intended (change in) exposure/behavior?”. The second framework, a model proposed by Nielsen and Randall (11), takes a broader view. It does not focus on implementation alone but also incorporates concepts such as initiation, implementation strategy, and mental models. By taking this broader view of process evaluation, information could also be gathered about the (change in) exposure or behavior. By adding mental models, for example, an explanation could be found for participants’ motivation to take part in intervention activities or make use of intervention facilities. This was illustrated by Biron et al (29), who reported that managers failed to use a stress risk assessment tool (ie, dose received) because they did not feel that stress was a problem (ie, attitudes and perceptions of intervention users).

A problem with this broader approach is that it might blur the lines between process variable and effect outcome. An attitude or perception that seems to influence intervention participation (and implementation) can be regarded as a process variable. Alternatively, maintaining or changing an attitude or perception can be an intermediate effect of an intervention, in which case it may be more accurately described as an effect outcome. An example of a process variable that could also be an intermediate effect is communication. Communication about the intervention may be important for implementation (the process thereof), but an intervention can also change the way different stakeholders interact, leading to improved communication (intermediate effect). The dilemma that arises is whether this information should be gathered and reported as part of the process evaluation or the effect evaluation. A way to make this decision is to establish beforehand whether the variable is part of the underlying theory or working mechanism behind the intervention (8). If this is the case, the variable should be regarded as an intermediate effect and measured as part of the effect evaluation. A systematic way to take intermediate effects into account is to formulate a program theory (10). A program theory states under which conditions researchers expect proximal changes to occur (30) but seems to be missing in many of the included studies. Program theory evaluation can provide quantitative outcomes, which can be related to intervention effect outcomes. Quantitative variables can give insight into the extent to which the intervention was used (eg, dose received), whereas qualitative data can provide more in-depth information (eg, barriers and facilitators). Sometimes, researchers might not yet be aware of certain intermediate effects. In that case, a qualitative process evaluation offers room for exploration, catering more to the practical nature of the applied research setting of interventions, in which fewer factors can be controlled than in a laboratory setting. This may explain why half of the articles contained reports of process variables but did not mention the use of any theoretical framework for their measurement.

Strengths and limitations

A first strength of this review is our elaborate and thorough selection of studies; two independent researchers searched seven databases and systematically inspected 3613 titles and abstracts. Second, the background information on interventions and methods provided unique insight into the specific circumstances in which process variables were assessed. Finally, careful deliberation resulted in a clustering structure tailored to the findings.

Some limitations should be considered when interpreting the results. First, as this is an explorative review, we chose to use broad definitions of process variables and evaluation. Consequently, more generally defined process variables were reported more often than specifically defined ones. One could argue that a study reporting only one broadly defined process variable can hardly be called a process evaluation. However, using broad definitions served the exploratory goal of this review, in which we aimed at inclusion rather than exclusion. As a result, many of the process variables found were not part of the preliminary design of the study (ie, they were not part of the theoretical framework used for the evaluation of the intervention). A second limitation is that, during data extraction, interpretation was sometimes necessary to tease out the process variables. This meant that not every variable could be extracted literally. For example, employee readiness (31) was coded as readiness for change. To enhance the coding format and curb possible observer effects, the first and second authors coded 20% of the included articles independently. Coding was completed only after consensus was reached on the first 20%. Despite the relatively large number of process variables found, it is possible that some variables were missed, especially because there was great heterogeneity in the naming of process variables.

Implications for research and practice

Both the heterogeneity of the process variables used and the lack of a (standard) framework in process evaluation limit the possibility to compare results and build on previous experiences. This hinders the development of process evaluation theory and limits the possibility to advise organizations about what is important for successful implementation of SMI. Future process evaluations of SMI should be guided by a standardized, comprehensive framework that goes beyond assessing implementation only. The Nielsen and Randall (11) model of process evaluation provides a good starting point. Standardization would also be supported by the systematic use of a program theory, which would oblige researchers to measure whether conditions for changes in behavior or exposure were in place and to assess whether intermediate stages were reached (30).

In most cases, process evaluation data were collected after intervention implementation and at the micro level (ie, at the level of the employee). As argued by Nielsen and Randall (11), retrospective evaluation may not capture changes in the process, and (in non-randomized controlled trial settings) does not provide the opportunity to take corrective action during intervention implementation should gaps emerge. Failing to collect information from stakeholders other than employees (micro level) also means that differences in perspectives among stakeholders might be overlooked. Many studies show, however, that for implementation success, support from other stakeholders is important (19, 29, 32–34). In future process evaluations, researchers could place more emphasis on the collection of process data at different levels.

It should be noted that even though there have been substantial developments in the research field of process evaluations (for instance, the inception of the new journal Implementation Science in 2006), advancements should still be made in relating available process data to effect outcomes. In this way, it could be assessed which process variables are central to successful implementation and predictive of intervention success.

Concluding remarks

This review complements the process evaluation literature by giving insight into the use of process variables in SMI evaluation research. It revealed that there is still great heterogeneity in the methods and process variables used. It also found that SMI evaluations used many process variables other than those reported earlier and that, in many cases, no theoretical framework or program theory was used to guide the measurement of process variables. In most cases, process variables were measured at the level of the employee and post intervention. Future process evaluations of SMI could benefit from data collection from different stakeholders (eg, employees, management, CEO) and at different times (before, during, and after the intervention). Also, the use of a theoretical framework could support a broader approach to process evaluation and may lead to a more standardized way of assessing intervention implementation.